ChatGPT, an artificial intelligence-fueled chatbot released in November, is changing the ways teachers educate students, scientists trust research and journalists report the news. It passed the U.S. Medical Licensing Exam and an exam to receive a business master’s degree.

UC Berkeley researchers say this isn’t a major turning point for AI technologically. Rather, they say, the release is a milestone for public awareness of current AI capabilities. It also presents an opportunity to engage the public in considering AI’s potential role in society moving forward.

“We have to look ahead to better prepare for what are likely to be increasingly powerful, yet flawed, systems in the near future with even more profound implications for society,” said Jessica Newman, director of Berkeley’s Artificial Intelligence Security Initiative and a research fellow for the Center for Long-Term Cybersecurity (CLTC).

Since the 1950s, researchers have worked to build “intelligent machines,” a concept the public is familiar with through everything from the television show “Westworld” to tools like the AI writing assistant Grammarly. But ChatGPT is on a different scale, spurring concerns about white-collar job displacement, the ethics of certain applications, disinformation and more.

“It's in some ways accelerating people's understanding of AI and what it is going to mean for them in the future,” said Hanlin Li, a postdoctoral scholar at CLTC who studies data scraping and data privacy issues. “This is such a valuable opportunity for us to shift our perspective.”

Raising public awareness and shifting mindsets

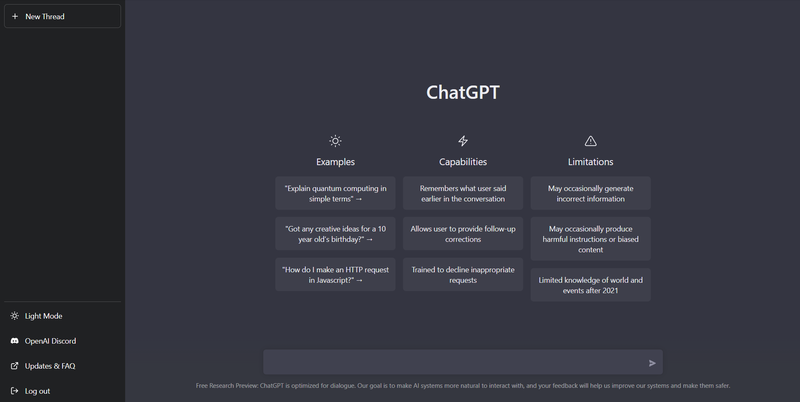

OpenAI has spent years developing the large language model that underpins ChatGPT, so that the chatbot “interacts in a conversational way” with users. Since 2020, the language technology that learns from human feedback has powered hundreds of applications, Newman said.

Now, researchers hope media coverage can encourage the public to consider both their role in advancing ChatGPT and similar technology and the cost of doing so. For example, Li expressed concern for journalists and content creators whose work is – “without their awareness or consent” – training this general-purpose AI system that could then be used to “automate some part of their job.”

“These AI firms are essentially conducting a form of labor theft or wage theft by getting journalists’ content or by getting artists’ content without compensating them. All of these efforts are helping companies produce profits,” Li said. “It's becoming more and more clear that we don't have a good understanding of how profitable or valuable our data is.”

Newman also said that users are providing value to OpenAI by experimenting with ChatGPT. Users are showing the company what its tool is capable of and helping identify its significant limitations, she said.

Fostering conversations around the role we’re playing in training AI is important, Li said. Often users jump at the opportunity to use free AI services without realizing their data is worth more than the service itself, she said.

With a more advanced version of OpenAI’s technology expected later this year, Newman said policymakers must set parameters around acceptable data use and governance. She recently wrote a white paper to help organizations develop trustworthy AI.

“This is such a good opportunity to raise public awareness and shift this mindset,” Li said. We need to “provide some transparency around our data’s value to AI technologies, so we can have a more informed conversation about what's a fair value exchange between us as data producers and companies.”

Learn more about ChatGPT from our experts

- Hanlin Li in WIRED: “ChatGPT stole your work. So what are you going to do?”

- Deborah Raji in The Verge: “Top AI conference bans use of ChatGPT and AI language tools to write academic papers”

- Stuart Russell in Le Point: “Human-level artificial intelligence will be ubiquitous”

- Deborah Raji in WIRED: “ChatGPT, Galactica, and the progress trap”